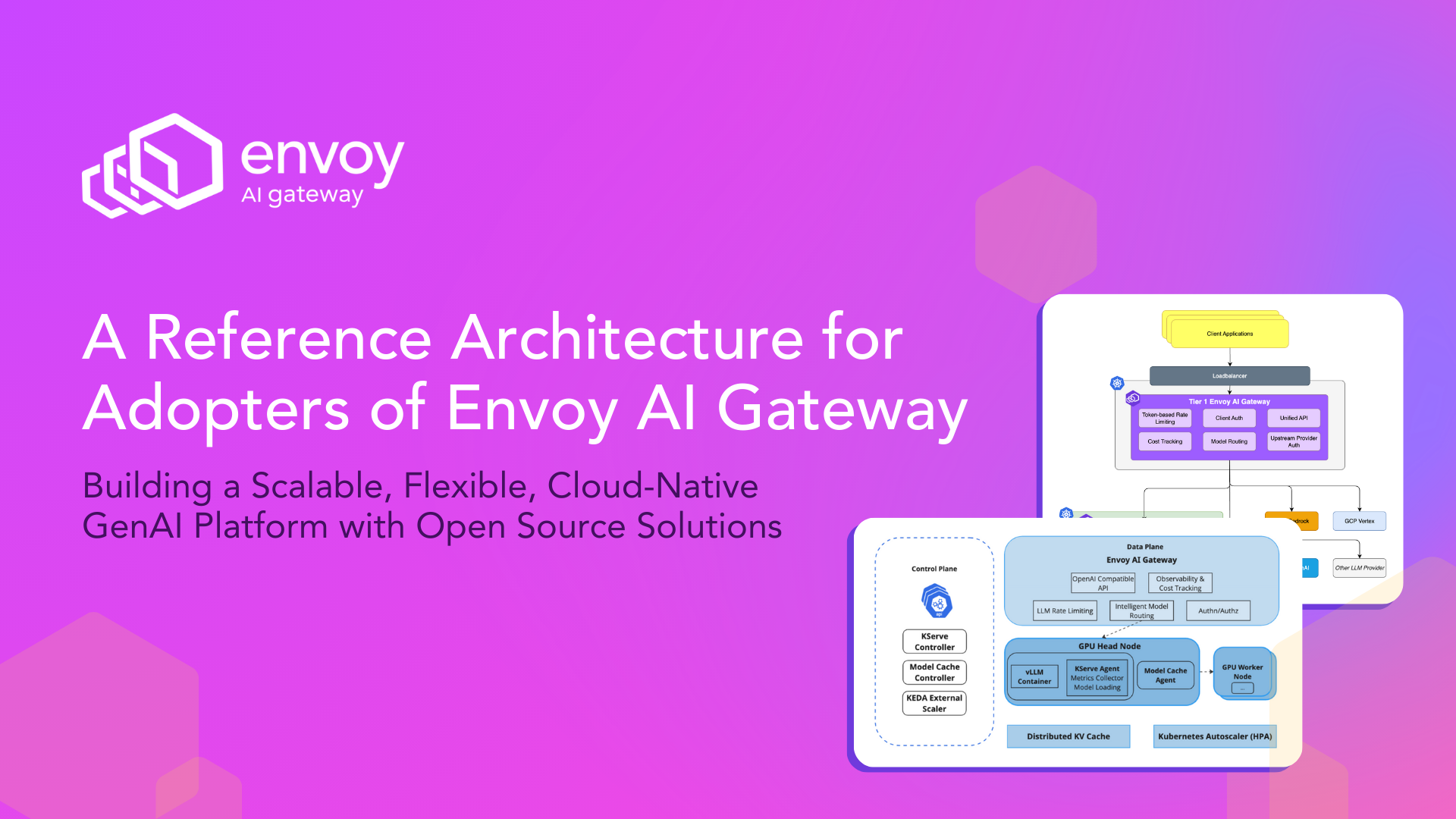

A Reference Architecture for Adopters of Envoy AI Gateway


Building a Scalable, Flexible, Cloud-Native GenAI Platform with Open Source Solutions

AI workloads are complex, and unmanaged complexity kills velocity. Your architecture is the key to mastering it.

As generative AI (GenAI) becomes foundational to modern software products, developers face a chaotic new reality: juggling different APIs from multiple providers while also trying to deploy self-hosted open-source models. The result is credential sprawl, inconsistent security policies, runaway costs, and infrastructure that is difficult to scale and govern.

Your architecture doesn’t have to be this complex.